Democracy Technologies: In your recent paper you took a closer look at a very common assumption regarding unequal participation in digital citizen participation. Can you tell us about this assumption?

Tiago C. Peixoto: Yes, if you look at both the literature and commentaries on digital participation, or if you attend a conference in the field, one of the questions that is always asked is: “What about those who don’t have access to the Internet?” There is this assumption that whichever group takes part in a process is necessarily the group benefiting the most from it. And that if people are somehow excluded from participating in a process, they won’t benefit from it at all. Lots of the research on tech and participation will look at the demographics of who participates with this assumption in mind. And much of the criticism of e-participation or Civic Tech – the name has changed many times – is linked to this unequal participation. This has been a widely accepted assumption for, I would say, the last 20 years.

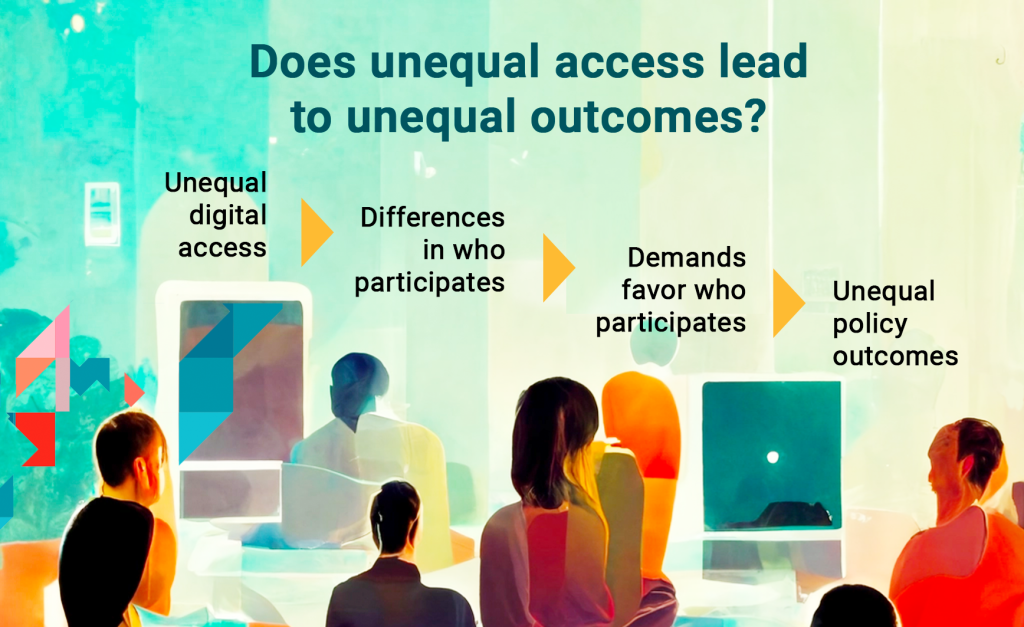

DT: In this study you also describe the mechanism thought to underlie this assumption. Can you walk us through this?

Peixoto: The assumed mechanism is that you have a participation chain. First, you have the existing digital divide in a certain country. This should then impact the participant profile. Then that will impact which demands are made through the civic technology platform. Finally, there is the impact on policy outcomes, meaning also an impact on citizens, or those who benefit from the process. This chain is assumed to be linear.

DT: That does all sound very plausible and it’s clear why this tends to be the assumption so far. What made you question this in your research?

Peixoto: We were probably the first to start looking at the effects of internet voting on participatory budgeting processes. There were criticisms of digital participatory budgeting projects very early on, saying that technology could be subverting the process, particularly because participatory budgeting is intended to be a process with redistributive effects: that is, poorer segments of the population should benefit the most from the process. So one of the big critiques was that when you introduce technology you are empowering the already empowered and are actually reversing the redistributive logic of participatory budgeting.

Given that debate, which was not very evidence-based, we decided to ask: “Does the use of online voting in participatory budgeting change which projects get selected for funding, or not?” We did a large study on the largest participatory budgeting process in the world, in Rio Grande do Sul State, in Brazil. When we examined the data we collected, we actually realized that, no, the use of internet voting did not distort the process much in terms of who benefited from its outcomes. Mostly the same types of projects were selected for funding, with or without the introduction of online voting, meaning that the beneficiaries of the projects were generally the same.

Then we started to question ourselves and the standard assumption, looking at other types of initiatives, including e-petitions. We looked at Change.org, where we examined petitions created in 132 countries. We found similar things there as well, in the sense that the assumption that the demographics of who participates necessarily determine who benefits from the outcome did not hold.

DT: So your overall finding is basically that the assumed mechanism doesn’t really work that way. What factors or mechanisms are at work instead?

Peixoto: What mainly influences how this works is the institutional design that surrounds the process. So for instance, if you look at a participatory budgeting process: when you start the process, you have a first, offline stage, in which you select which types of projects go to the ballot. The criteria for this selection often use what we call a distributive matrix. This has absolutely nothing to do with technology. Which means that who participates and whether online voting is used don’t necessarily affect the redistributive nature of the projects that might be selected. The options that are voted for are there due to a redistributive logic from the get-go.

In another case, the constitutional crowdsourcing in Iceland, you do see some distortions in terms of who participates. But ultimately the evidence we reviewed shows that the process had no impact on decision-making. That is, the process was not really consequential because of the surrounding political context. All we are saying here is, it’s less about technology and more about how that technology is going to manifest itself when embedded into institutions. And I think this is an extremely important point that we should be looking at more.

DT: So, assuming that there will always be some degree of inequality in participation, including in digital participation projects, what can be done in the institutional design of a process to ensure that the outcome is fair?

Peixoto: First of all I would say that you should look at each of the steps that we were talking about – digital divide, profile of the participants, the platform, the demands that are made through the platform – and how that translates into responsive government.

To give you another example – in a different study we were looking at FixMyStreet, a web-based citizen reporting platform, in the UK. What we found at the time is that – controlling for all other factors such as type, length and scope of demand – if you signed your demand with a male name, you were more likely to get a response than if you signed with a female name. And if you said “please”, you would increase your chances of a response even more. Adding a photo of yourself would also increase your chances. And so on. So one of the things that one could do, for instance, in terms of institutional design, is to create a list of the most and least gender-inclusive city councils in the UK in terms of their responsiveness. Maybe that would start to trigger some behavior change.

Other times you can test whether the interface itself can produce more inclusive results. In participatory budgeting, if you don’t want to use this redistributive matrix, you could make the projects that are requested from poorer areas appear larger on the screen or appear as the first options on the ballot – a “primacy effect” that should affect the distribution of votes. Whether that works or not is an empirical question. But the point here is about understanding the process all the way through, so you can identify all the points at which you can make the process more inclusive – but then looking at what the data actually says, and iteratively improving the inclusiveness of the process.

Going beyond the cases analyzed in our study, I hope our analytical framework can also help better assess other types of citizen engagement. Take for instance the case of citizen engagement processes in which citizens are selected by sortition, such as citizens’ assemblies. One of the things that we particularly like about these is that they’re expected to be more inclusive, because citizens are either randomly selected or selected through stratification. However, what our findings suggest more broadly is that the demographic composition is just the beginning of a participatory process. For instance, a potential hypothesis is that, once a citizens’ assembly makes recommendations, the actions that the government chooses to pick up could still be those preferred by wealthier citizens, or any other particular group. Whether that happens or not has to be assessed on a case-by-case basis. But again, one of the main takeaway messages from our study is that the entire participation chain needs to be assessed, and not just who participates or not.

DT: Of course, the aim of people advocating for more citizen participation, including digital citizen participation, is that as much of it as possible gets picked up by policymakers, and that this translates as directly as possible into politics. As you suggest, that often doesn’t happen. What would you say to policymakers about that?

Peixoto: First of all, if you’re not willing to respond to the outcomes of a participation process, or you’re not willing to make sure that you will take them on board, don’t do it. Secondly, kind of stemming from that, if you ask people whether they want A or B and you don’t have a plan for what to do when they ask for A or B, don’t do it either.

This potential responsiveness gap is amplified by technology because listening and creating platforms for citizens to voice their preferences has never been so easy to do. Technology has made it much easier to create participation processes, but government capacity or willingness to respond unfortunately hasn’t changed much since before the existence of the internet. So the rule should be: if you’re not willing to respond, don’t do it.

DT: How would you suggest policymakers should respond in a scenario where the process comes up with an outcome that clearly doesn’t reflect the population?

Peixoto: Yeah, I mean then you have to, unfortunately, rethink your process. If you realize, for instance, that online voting negatively affected the inclusiveness of a participatory budgeting process, that would be an unfortunate result. But governments need to implement the outcome in order not to ruin the credibility of the process – particularly if that process is expected from the very beginning to have a binding effect, and not be just a mere consultation.

This is why it is important that participatory processes have a built-in mechanism of self-correction as they are repeated over time. Participatory budgeting has done this since the very beginning and every other participatory initiative should too. When you start the next year of a standard participatory budgeting model, it is the first thing that you do. You talk about the corrective measures that you can take to improve the participatory process itself, in order to make it more inclusive or to maximize the public good that is produced. We can’t get participation right all the time, and sometimes there are going to be flaws. That’s why you need to have built-in mechanisms for improving it from the beginning.

But you will only be able to leverage your built-in improvement mechanism if you are collecting data from the process. Very few digital participatory processes nowadays collect data on all four stages of the process. I mean, we focused on four cases. That’s not because we just like that number; it’s because there is not enough data out there, because people are either not collecting it or not publishing it.

DT: Based on your findings, is there any other advice that you would have for people designing a process?

Peixoto: Remember that whichever process or whichever civic technology you are working with, it can inhibit some behaviors and it can encourage others. So you should always be trying to understand how that’s manifesting in practice. And test the process – do pilots, collect data, and iterate. Don’t assume that, just because it looks good and you tried to think of everything, the process will be great. Always be suspicious. Don’t fall in love with your design, and always question yourself on whether your decisions are making a process more or less inclusive.