ChatGPT is the latest iteration of a prototype AI chat program, developed by AI research lab OpenAI.

Released just two weeks ago, it has taken the internet by storm, with more than a million people logging in to probe its many (and varied) capabilities.

Users were confronted with a generational leap forward that pushes the boundaries of what a so-called ‘chatbot’ can be. Able to do far more than simply answer ordinary questions, ChatGPT has demonstrated an ability to customise answers through training, imitate styles, write half-way convincing long-form text, assemble complex information into easy-to-understand passages, identify issues in code and even write simple software, all in near-perfect natural language.

Of course, we at Democracy Technologies were interested. “What…” we wondered, “…are the implications for democracy, and for our work writing about democracy technologies? How is this going to affect the way people learn about and engage with democratic tools, processes and institutions?”

So, we decided to give it a go. These are the results:

Laura – Using ChatGPT to Replace Our Expert Interview Partners and Guest Writers

Without any real intention of ever using it that way, I wanted to see if ChatGPT could help us produce content on Democracy Technologies. The idea was to give it both an easy task and a more difficult one. I started out by trying to reproduce one of our simplest pieces of content: The Top 5 Advantages of Digital Participation, by Participation Factory. The first answers were definitely not bad, but I noticed that they were quite general and most of them would have worked almost the same for non-digital citizens’ participation.

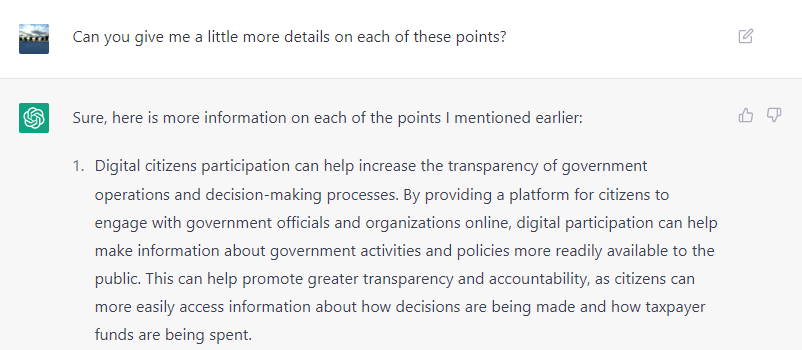

Asking for more details yielded surprisingly good results, although the answers did sound a bit lifeless and lacked real-life examples.

Probing a little bit deeper, I did get better and better answers. Eventually, it also made some points that we hadn’t considered before.

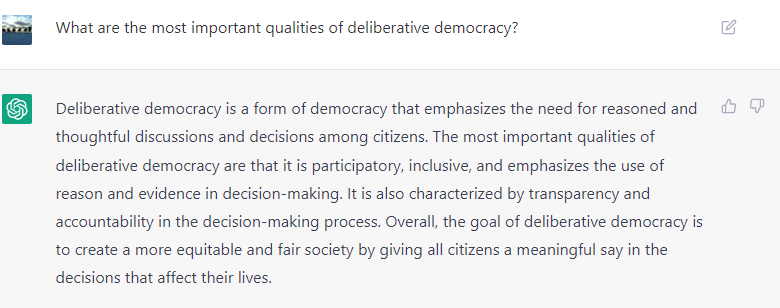

Next, I tried reproducing one of the more complex pieces we published, the Interview with Claudia Chwalisz on Replacing Elections with Deliberation. I asked the exact same questions as in that interview. The answers were ok, but rather superficial and boring in comparison to the real interview:

I found it interesting that my line of questioning led it to argue in favour of sortition-based deliberative democracy:

Despite impressive results, ChatGPT was clearly unable to replace our expert interview partners and guest writers. I might still use it in the future to check that we haven’t missed any obvious arguments on a topic, or to give me an idea of how an article could be structured.

Daniel – Using ChatGPT as a Civics and Voting Tool

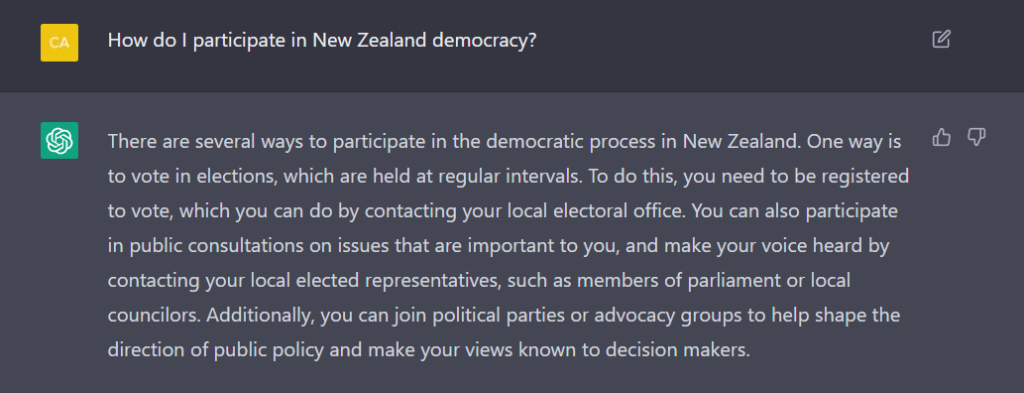

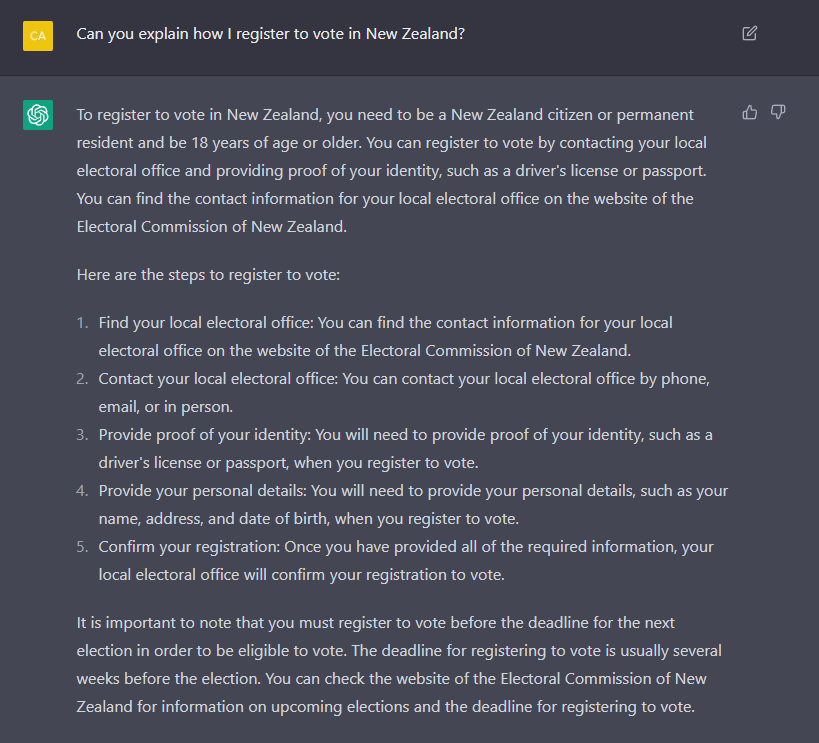

The first thought that came into my mind when loading up ChatGPT was how it might be useful as a civics education tool: specifically, how it could be used to help people learn about and engage with the democratic process. As someone from New Zealand, where civics education leaves something to be desired and comic YouTubers fill in the blanks, I find this a particularly important topic. Whether it’s something as simple as enrolling to vote (where we actually do OK), or actively participating in party politics, an AI that uses natural, easy-to-understand language could make a real difference. This is how it went…

First up, I wanted to know what my general options are for participating in this whole ‘democracy’ business.

The answer, while light on detail, had the benefit of being a succinct summary of the different avenues for engagement. But let’s see how it does when I get a bit more specific.

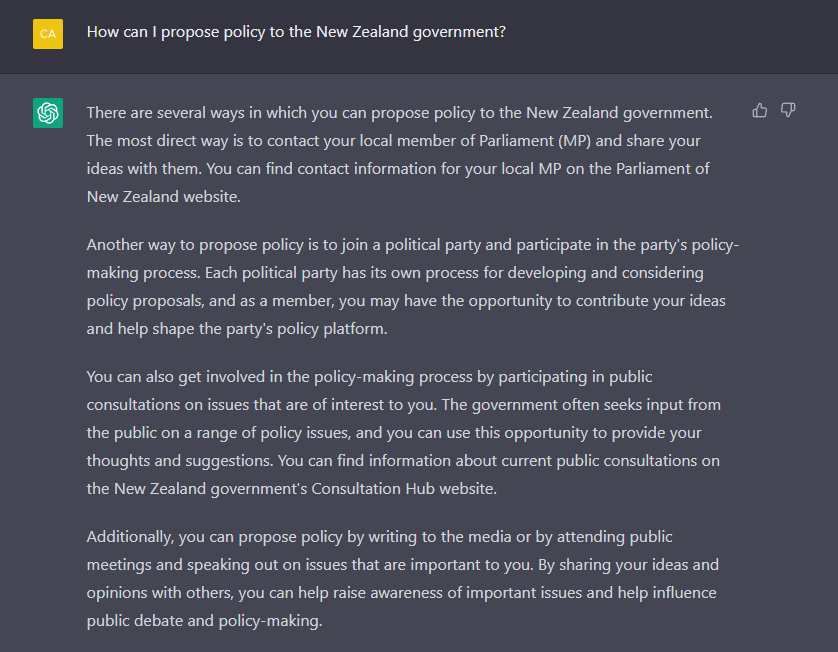

Ok, ok, that’s all fine, but probably something I could just as easily find by going to the website. What about other kinds of engagement?

Again, fairly standard stuff: basically an aggregation of different help pages. But what about recommendations? Lots of apps out there seek to improve participation by helping people see which parties line up with their values and policy positions. Can it give me advice on which party is best suited to me?

Eh, close enough.

ChatGPT – don’t quit your day job.

Government agencies and political parties – don’t delete your websites just yet.

Graham – Using ChatGPT to Write an Op-Ed and Make Political Decisions

ChatGPT is at its best when you ask it for some kind of information. But what happens if we try to use it to formulate an opinion, or even to help us make important political decisions with far-reaching consequences?

Ask it directly for an opinion, and it gives a very clear answer: “As a language model trained by OpenAI, […] I do not have the ability to express opinions or make decisions.”

Nonetheless, I was curious enough to keep pushing. Could AI write an opinion piece, or even provide the raw material for one? Could it maybe even convince me to rethink my own opinion on a major issue? Since it doesn’t have access to information on events post-2021, I posed it a more general version of the question I addressed in my recent column on Elon Musk’s Twitter takeover:

This is not a million miles away from the argument I made. Notice, however, how carefully worded it is: it is not necessarily a good idea for a single individual to take these decisions alone; it is generally better for a group of people. It always leaves itself the option of backing out of a particular opinion.

The definition of the “group of people” who would be qualified to regulate social media is also very vague, so I asked a more direct follow-up question:

To its credit, this response does a good job of listing some basic pros and cons. Beyond that, however, it relies on the favourite trick of high school students writing essays: “whether public regulation is good or not is a matter of debate and depends on one’s own perspective”. Or “ultimately, the answer to this question may depend on a variety of factors, such as cultural and societal norms, political ideologies, and individual beliefs and values.” In other words: don’t ask me.

Of course, this is a deliberate safety feature – the developers don’t want to see headlines along the lines of “AI Says Social Media Should be in Public Hands”, or “AI Sees no Role for Governments in Social Media Regulation.” Why not, exactly?

I think this highlights a core ambiguity in our collective attitude towards AI. When an individual writes an op-ed, we feel free to disagree; it’s one voice in the discussion. But there’s a tendency to see AI as an authority, or at least as claiming to give an answer more authoritative than any human author can. “AI says Social Media regulation belongs in public hands” has a very different ring to it than “Joe Smith says Social Media regulation belongs in public hands.”

Even if the developers are 100% clear that they don’t think their language model is qualified to make decisions for us (and the team behind ChatGPT are very clear on this), we’re apt to get the feeling that the results of AI are being presented as somehow more objective than our own opinions. In reality, though, its answers are shaped by the people who created it, who are no more and no less qualified than the rest of us.

It’s reassuring that ChatGPT has no pretensions to taking decisions away from us. Yet as this kind of tool becomes more ubiquitous, we are going to need to learn to be careful in how we interact with it. ChatGPT never tires of reminding you that it is just an AI language model, but not all developers will take such care to be impartial. The danger lies in it being taken for more than this.

But perhaps I should give ChatGPT the final word:

I couldn’t have said it any better myself.