The idea behind AI image generation is simple. You type in a command or “prompt” describing the picture you want, and the AI spits out a selection of images that supposedly best represent it.

Before we started experimenting with AI image generation, we constantly struggled to find good images for our articles that didn’t quickly become repetitive. Additionally, with a focus on tech, it’s an unfortunate truth that most of the pictures we feature on our site tend to be of men. Along with seeking out more women in the industry to talk to, we thought AI images might help us balance this out.

All of the images contained in this article were generated using Midjourney.

AI-generated female programmers wear skirts

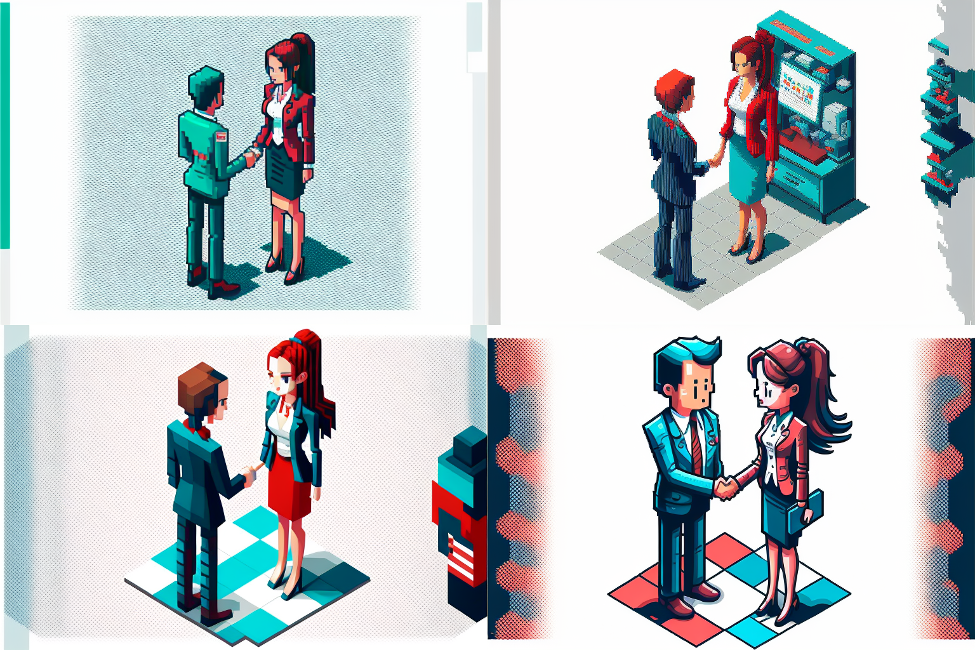

One of the first articles we trialled an AI-generated image for was about collaborations between software developers and public officials. A representative from each group shaking hands seemed the obvious choice of image. I insisted that at least one of them had to be a woman. We first tried a pixel art style, which we thought fitting for a techy article.

These were the first results:

Undeterred, we decided to try customising the clothing. This time, we specified “woman in jeans and t-shirt with computer shaking hands with man in suit”. The results:

As you can see, it seemed impossible to generate pixel art of women who weren’t wearing skirts.

We tweaked the style and keywords slightly and eventually found a picture that we deemed acceptable:

The issue recurred in different variations across different styles. The overwhelming majority of pictures of women reproduced the cliché of the sexy woman.

For AI, politicians are white men talking to white men

At this point, we began testing more deliberately for biases. Here are some examples:

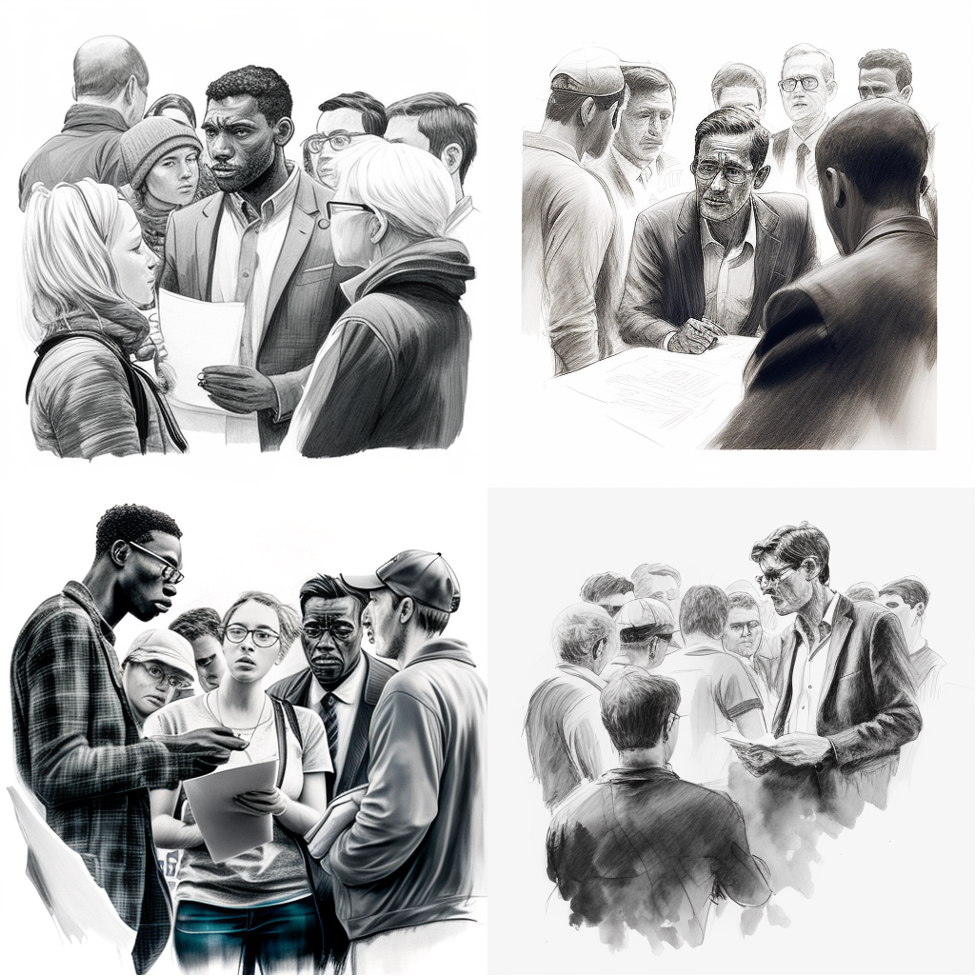

We started with variations of “politician talking to citizens”.

All of the images feature men: white men, to be precise. Similar results occurred for prompts that didn’t specify a particular style.

Once again, the results are white men, addressing an audience of white men.

Next, we specified “black politician”. The results:

Again, the AI opts for men addressing an audience of men. Interestingly, the prompt “black politician” produced a speaker addressing an exclusively black audience. And while the previous set of images showed white politicians addressing crowds in rather grand-looking buildings, this prompt moves the scene outdoors, giving it the feel of a rally.

Finally, we decided to give the AI a little push and asked it to generate “diverse” groups.

On its own, this worked quite well:

But as soon as it became about politicians again, we saw the proportion of white men go back up:

We did get some better images, depending on the styles and specific wording we tried.

How to deal with AI biases

Obviously, these biases present a major problem for any democratically minded people who want to use AI-generated pictures in their work. As long as so many people remain under- or misrepresented in public discourse (and this includes pictures on the internet), they are also less likely to be included and considered in democratic participation.

For our own work, we still haven’t reached a final decision about the use of AI-generated images, but we are definitely more cautious than we were at the beginning. It is possible to find workarounds by tweaking the prompts and experimenting with different styles. But can we expect everyone to do this, or is the internet soon going to be even more populated with images depicting only a very narrow subsection of humanity?

As with other AI tools, the data that AI image generators have been trained on largely determines the results. In the case of image-generating AIs such as Midjourney, this data consists of millions of images from the internet, each paired with a text description. The ideal scenario would therefore be to drastically pre-filter or alter the training data. OpenAI attempted this with DALL·E 2, but it came with its own challenges: removing overly sexualized images of women, for instance, simply reduced the overall share of women in the dataset. Considering the overwhelming number of biased images on the internet, it seems unlikely that this solution will be workable any time soon.

In other contexts, experts regularly call for AI to be regulated. In the EU, AI regulation is on the way, but it’s not clear whether this approach is viable, or even desirable, in the case of AI image generators.

At the very least, we need a massive increase in education on AI. Problems like these exist in other forms of AI technology and will only become more acute as AI is used in more areas of our lives. A solid understanding of how these technologies work, and of the biases they tend to perpetuate, will allow us all to make informed decisions about when and how to use AI.